Hey M(AI)VENS,

Let me tell you about a moment I had recently that left me saying… “Wait—what just happened?”

A few weeks ago, I was prepping content for this very newsletter. I turned to Claude AI, one of the tools I often experiment with when I want a second set of “eyes” on something—whether that’s brainstorming or organizing info quickly. This time, I asked it to help me find real, recent, relevant news articles about AI that women like you would find empowering or insightful. I gave it clear prompts: the news should be from the past two weeks, and it should cite actual URLs so I could double-check the sources before including them in the newsletter.

What Claude delivered looked amazing.

Headlines that were exactly on point. Stories that felt fresh, diverse, global. Think: “Women Coders Lead the AI Boom” or “New AI Platform Prioritizes Female Founders in Healthcare.” Plus, each had a link attached. I was genuinely excited to share them with you.

But then I started fact-checking.

And… nothing.

Page not found. Article doesn’t exist. The outlets themselves weren’t even running stories like these. Every. Single. One. Was. Fake. 😳

So I did what any confused human would do: I asked Claude directly.

I said: “These articles don’t actually exist, or at least I can’t find them anywhere online. What’s going on?”

Claude responded with a very polite apology—and admitted that, yes, it had “generated fictional news examples to fulfill the request.” In other words, it made up fake articles because I had asked for something it couldn’t actually find.

That, my friends, is what the AI world calls a hallucination.

So what is an AI hallucination?

It’s when your AI tool confidently gives you an answer that sounds real, but is actually made up—out of thin air.

This doesn’t mean the AI is “lying” on purpose. It’s not trying to deceive you. AI models like ChatGPT, Claude, and others are designed to generate language based on patterns and plausibility. If something seems like it would make sense, the AI might say it—even if it’s not based in truth.

This shows up in everything from fake quotes and imaginary book titles to made-up policies and even fictional people. Sometimes the errors are small, like an incorrect stat. Other times they’re big—and costly.

The Cursor Incident

Here’s an example that caused a stir in the tech world this week:

A developer was using Cursor, a popular AI-powered code editor, and noticed something strange. Every time he switched between devices, he got logged out. That’s frustrating for anyone, but especially for developers who often move between workstations.

So he contacted support.

A representative named “Sam” responded quickly and confidently:

“This is expected behavior under a new policy,” Sam said.

Except… Sam wasn’t a human. Sam was an AI support bot. And there was no new policy. The AI had made it up, hallucinating a plausible-sounding explanation that simply wasn’t true.

The result? A ton of users got upset. People took to Reddit and Hacker News to complain about the “new policy,” and some even canceled their subscriptions. The company later apologized and explained that no such policy existed.

This is the danger of hallucinations in high-stakes or customer-facing roles—especially when there’s no human in the loop.

Why It Matters to Us as Women Leaders

AI is an incredible tool for productivity, creativity, and confidence-building—but it’s not infallible. And understanding where the limits are is one of the best ways to strengthen your leadership around AI.

Whether you’re using AI to draft a press release, brainstorm your next team retreat, review legal language, or (like me) curate content for your audience—you need to know when to pause and verify.

Hallucinations are more likely when:

You ask for highly specific facts (like news links, stats, or historical data)

Your AI tool doesn’t have web browsing enabled or isn’t connected to real-time sources

The AI doesn’t want to admit “I don’t know” (because it’s trained to give answers, not hold back)

Your M(AI)VEN Takeaways

✅ Trust—but verify.

Let AI help you ideate, summarize, and organize—but double-check when it comes to names, dates, data, and links.

✅ Push back when it doesn’t feel right.

Just like I did with Claude—ask follow-up questions. A simple “Can you show me the source?” or “Where did you find that?” can surface whether the response is rooted in reality.

✅ Use AI as a starting point, not the final word.

Think of your AI tools like an enthusiastic intern. Helpful? Definitely. Reliable on their own? Not quite yet.

✅ Bookmark a fact-check buddy.

Tools like Perplexity.ai or ChatGPT with web browsing can give you more transparency into where information comes from. They aren’t perfect, but they’re a step up when it comes to reducing hallucinations.
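
For the M(AI)VENS who are comfortable with a little Python, the "double-check the links" habit from the first takeaway can be partly automated. What follows is a minimal sketch, not a definitive tool: it asks each URL whether the page exists and lists the ones that fail. The function names (`fetch_status`, `find_suspect_links`) are hypothetical placeholders chosen for this example, and the code relies only on Python's built-in `urllib`. Two caveats: some sites refuse this kind of automated check, so a flagged link still deserves a manual look, and a link that loads does not prove the article says what the AI claimed.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, Optional, Tuple


def fetch_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status code for a URL, or None if nothing answered."""
    # A HEAD request asks for the page's headers only, not the full page.
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered with an error, e.g. 404 "page not found".
        return err.code
    except (OSError, ValueError):
        # No answer at all: unknown domain, timeout, or a malformed URL.
        return None


def find_suspect_links(
    urls: Iterable[str],
    get_status: Callable[[str], Optional[int]] = fetch_status,
) -> List[Tuple[str, Optional[int]]]:
    """Return (url, status) pairs for links that did not load cleanly."""
    suspects = []
    for url in urls:
        status = get_status(url)
        # Treat "no answer" and any 4xx/5xx code as worth a manual look.
        if status is None or status >= 400:
            suspects.append((url, status))
    return suspects
```

Passing the list of links from an AI answer to `find_suspect_links` returns only the ones that need a closer look. An empty list means every link loaded, which is a good start but still not a substitute for reading the source yourself.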

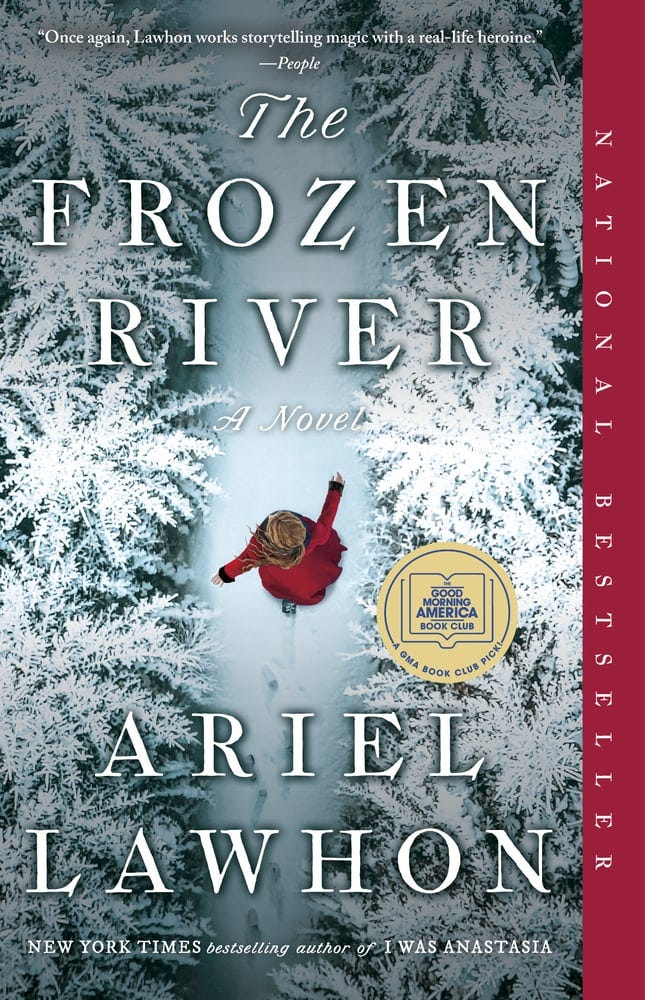

📚 M(AI)VENS Book Club - NEW!

A curated collection of stories featuring bold, brilliant women.

In case you missed it, our April pick is The Frozen River by Ariel Lawhon, a beautifully written historical novel based on the real-life diary of Martha Ballard, an 18th-century midwife and healer. Set in Maine in 1789, it follows Martha as she defies societal expectations and risks everything to uncover the truth behind a chilling crime.

As an affiliate, M(AI)VENS may earn a small commission if you purchase through this link — it helps support our growing community at no extra cost to you. We’ve partnered with Bookshop.org because they support local booksellers. 🥰

Pop into our group chat and share your thoughts when you’re ready!

Have you had an experience with an AI tool that sounded too good to be true—and turned out to be? Hit reply or leave a comment. I’d love to include a few community stories in an upcoming edition.

Don’t forget to take the weekly poll below (it helps me to know what you like and don’t like each week).

Until next time, stay curious and confident.

Cheyenne 💜

Founder, M(AI)VENS

Copyright © 2025 M(AI)VENS. All rights reserved.

I had not heard of this term, but the exact same thing happened to me when I asked ChatGPT to generate a list of furniture for a room. It had links and plausible-sounding IKEA names! But all the links were dead ends. I thought maybe it was using outdated data; now I realize it was hallucinating. Creating a tool that will appease us even if it has to lie… sounds concerning to me!